Google Cloud Scheduler HTTP Jobs

Using Google Cloud Scheduler HTTP Jobs

While migrating a legacy web application to a serverless environment, our team had to tackle, among other things, the problem of cron jobs. The legacy app is a classic web application built on the LAMP stack, and it does a lot of its work in CLI scripts run by [crond](https://en.wikipedia.org/wiki/Cron). The new architecture uses Cloud Run for the web workloads. However, Cloud Run could not handle cron jobs, so we faced a choice: either run another instance of the same app on a Compute Engine VM with a cron daemon, or come up with another solution. Another instance of the app would complicate our deployment procedure, and it also felt like a compromise or a shortcut. As you might’ve guessed from the title of this post, we began to look into Google Cloud Scheduler.

The scheduler itself is a kind of cron daemon for the cloud: you specify a schedule, and the scheduler triggers jobs according to that schedule. There are three types of jobs:

- App Engine

- Cloud Pub/Sub

- HTTP

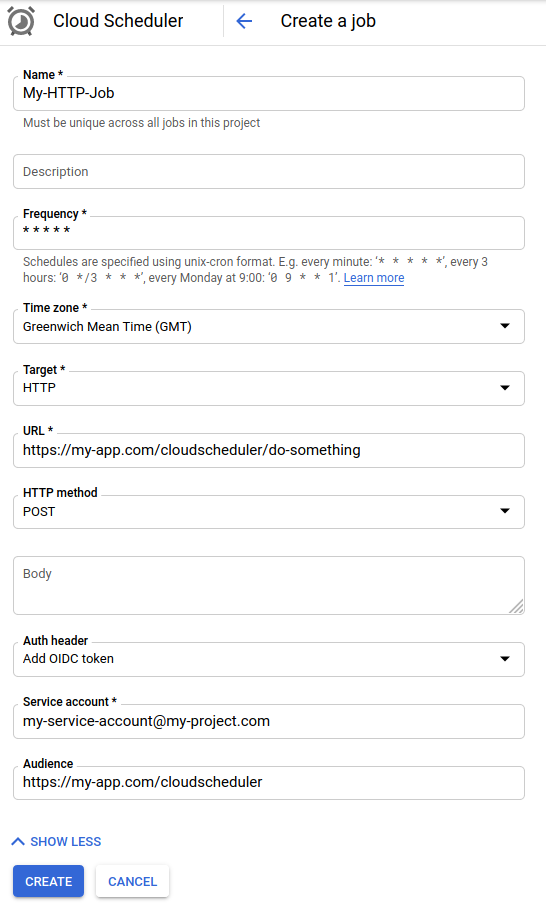

We don’t use App Engine. Pub/Sub would, again, require a running worker that listens on a Pub/Sub subscription and triggers jobs as messages arrive. For us, that would be essentially the same as a Compute Engine VM with a cron daemon, or even worse: with a cron daemon we would not have to touch the app code at all, whereas Pub/Sub would require us to implement the subscriber. But there’s a third option: HTTP. You provide an arbitrary URL and specify how the HTTP request is sent (HTTP method, headers, and body).
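To make the HTTP approach a bit more concrete, here is a minimal sketch of how the work of the old CLI scripts could be exposed as one URL per job. Everything in it is hypothetical: the paths `/cron/cleanup` and `/cron/send-digest`, the `$cronJobs` table and the `runCronJob()` function are illustrations, not the post's actual code. In a real app each callable would invoke the existing script logic, and the request would be authenticated before anything runs.

```php
<?php
// Hypothetical mapping from Scheduler job URLs to the work the old CLI scripts did.
// Each Cloud Scheduler HTTP job would target one of these paths.
$cronJobs = [
    '/cron/cleanup'     => fn(): string => 'cleanup done',
    '/cron/send-digest' => fn(): string => 'digest sent',
];

// Runs the job registered for $path. Unknown paths are rejected,
// so only whitelisted jobs can ever be triggered over HTTP.
function runCronJob(string $path, array $jobs): string
{
    if (!isset($jobs[$path])) {
        throw new InvalidArgumentException("Unknown cron job: $path");
    }
    return $jobs[$path]();
}

// In a web request this could be called as:
// echo runCronJob(parse_url($_SERVER['REQUEST_URI'], PHP_URL_PATH), $cronJobs);
```

Keeping an explicit whitelist means a typo in a Scheduler job URL fails loudly instead of silently doing nothing.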

Obviously, you don’t want random internet surfers to trigger your cron jobs, so there must be some authentication. Luckily, Cloud Scheduler has you covered here: when a job is triggered and the HTTP request is sent, it also sends an `Authorization` HTTP header with an OIDC token (provided the job is configured with a service account to generate that token).

Our app is written in PHP, and here’s how you can check the auth token in your controllers:

```php
// Requires the firebase/php-jwt Composer package
use Firebase\JWT\JWK;
use Firebase\JWT\JWT;

// Google's public signing keys for OIDC tokens, in JWKS format
$jwksJson = file_get_contents("https://www.googleapis.com/oauth2/v3/certs");
if (!$jwksJson) {
    throw new \Exception("Access denied (JWKS inaccessible)");
}

$jwks = json_decode($jwksJson, true);
if (!$jwks) {
    throw new \Exception("Access denied (JWKS json_decode error)");
}

// Cloud Scheduler sends "Authorization: Bearer <token>"
$authHeader = $_SERVER['HTTP_AUTHORIZATION'] ?? "";
if ("Bearer" !== substr($authHeader, 0, 6)) {
    throw new \Exception("Access denied (No Bearer token)");
}

$jwt = trim(substr($authHeader, 6));
if (!$jwt) {
    throw new \Exception("Access denied (Bearer token empty)");
}

// JWT::decode throws when something goes wrong (bad signature,
// disallowed algorithm, expired token, ...).
// firebase/php-jwt v5.x: the third argument lists the allowed algorithms.
// In v6+, omit it: JWT::decode($jwt, JWK::parseKeySet($jwks))
$decoded = JWT::decode($jwt, JWK::parseKeySet($jwks), ["RS256"]);

// Further on, you may (and should) check that the decoded token
// contains a valid "aud" (audience) claim
```

This code makes use of the firebase/php-jwt composer package.
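The audience check mentioned in the last comment could look roughly like the helper below, which runs on the object returned by `JWT::decode()`. This is a sketch under assumptions: the function name `isValidSchedulerToken` and its parameters are hypothetical, the expected audience is whatever you configured for the job (Cloud Scheduler uses the target URI when no audience is set), and the `email` claim is assumed to carry the service account the job uses to generate its OIDC token.

```php
<?php
// Hypothetical helper: validates claims of a token whose signature
// has already been verified by JWT::decode().
function isValidSchedulerToken(object $claims, string $expectedAudience, string $expectedEmail): bool
{
    // Google-signed ID tokens use one of these two issuer values
    $validIssuers = ['https://accounts.google.com', 'accounts.google.com'];
    if (!in_array($claims->iss ?? null, $validIssuers, true)) {
        return false;
    }

    // "aud" must match exactly what the job was configured with
    if (($claims->aud ?? null) !== $expectedAudience) {
        return false;
    }

    // Only accept tokens minted for the service account the job runs as (assumed claim)
    return ($claims->email ?? null) === $expectedEmail;
}

// Usage with $decoded from the snippet above (hypothetical URL and account):
// if (!isValidSchedulerToken($decoded, 'https://example.com/cron/cleanup',
//         'scheduler@my-project.iam.gserviceaccount.com')) {
//     throw new \Exception("Access denied (invalid token claims)");
// }
```

Without an audience check, any valid Google-signed ID token issued for some other service would also be accepted by the endpoint.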

Many people see triggering cron jobs through HTTP requests as a hack or a shortcut. Indeed, for a more complex architecture this would probably not be enough. There you probably have to deal with Pub/Sub anyway and solve the infrastructure problems somehow; in that case, Scheduler with Pub/Sub jobs would probably be my choice. More complex architectures require more complex solutions. In our case, however, we wanted to keep things simple, and the approach described here does exactly that: it is simple, reliable and very maintainable, and it keeps track of the status of every cron job (there is a full log right there in GCP).

What else is there to say? Cron jobs often need significantly more time than regular web requests. We set a request timeout of 15 minutes for our Cloud Run service:

```sh
gcloud run deploy legacy [.. other options ..] --timeout=15m
```

In PHP we also set the same time limit for the HTTP endpoints that serve Scheduler jobs. Scheduler has another limit as well: the maximum time it waits for a response from an HTTP endpoint. It is called `--attempt-deadline` and defaults to 3 minutes. If your endpoint doesn’t produce a response within this timeframe, the job itself will, of course, continue executing, but Scheduler will mark the attempt as unsuccessful. So it’s important to set adequate limits; otherwise your Scheduler logs will not give you a valid picture of how your cron jobs behave.
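On the PHP side, setting the same limit could look like the sketch below, assuming the 15-minute Cloud Run timeout from above. The constant `CRON_TIME_LIMIT_SECONDS` and the function `prepareCronRequest()` are hypothetical names. The `ignore_user_abort(true)` call is an addition not mentioned in the post: PHP notices a dropped client connection (for example, Scheduler giving up after its attempt deadline) only when it tries to send output, and by default it would then stop the script.

```php
<?php
// Keep in sync with the Cloud Run --timeout=15m setting
const CRON_TIME_LIMIT_SECONDS = 15 * 60;

// Hypothetical helper to call at the start of every Scheduler endpoint
function prepareCronRequest(): void
{
    // Allow the script to run for up to 15 minutes
    set_time_limit(CRON_TIME_LIMIT_SECONDS);

    // Keep running even if the client (Scheduler) disconnects
    ignore_user_abort(true);
}

prepareCronRequest();
```

Deriving every limit from one constant makes it harder for the PHP limit, the Cloud Run timeout and the Scheduler attempt deadline to drift apart.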

In conclusion, smaller, pure web applications with a simple architecture may benefit from having their cron jobs triggered by Cloud Scheduler instead of running a cron daemon.

Another day, another post, this time about Google Cloud Scheduler HTTP jobs: https://t.co/xRpx16dxTE

— Victor Bolshov (@crocodile2u) November 29, 2020